Among the many highlights of the Ansys 2023R1 release, a standout is the availability of the fully native GPU (Graphics Processing Unit) solver in Fluent. The solver was written from the ground up, and benchmark testing has produced eye-opening numbers. In a recent test on a large external vehicle body, a single A100 GPU ran five times faster than a Xeon 8380 with eighty CPU cores. Multiple GPU cards can also be combined, delivering performance equivalent to thousands of CPU cores at a lower purchase cost and lower running costs.

From what we’ve seen so far, the performance benefits mean it is only a question of time before CPU-based CFD solvers become a thing of the past.

Ansys has posted a number of benchmark results for larger case studies, such as those shown in Unleashing the Power of Multiple GPUs for CFD Simulations (ansys.com). In this blog, we’ll focus on the performance of two smaller test cases you could run with less than 8GB of video memory. The first is a pump, solved using both multiple frames of reference and a transient sliding mesh. The second is a study calculating steady lift and drag on a generic aircraft.

Steady State Pump, 8 core laptop with NVIDIA A4000 GPU

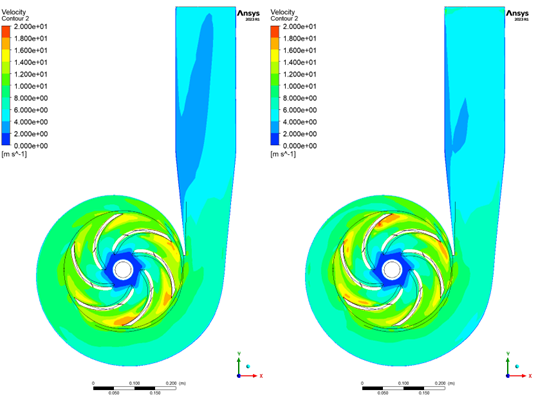

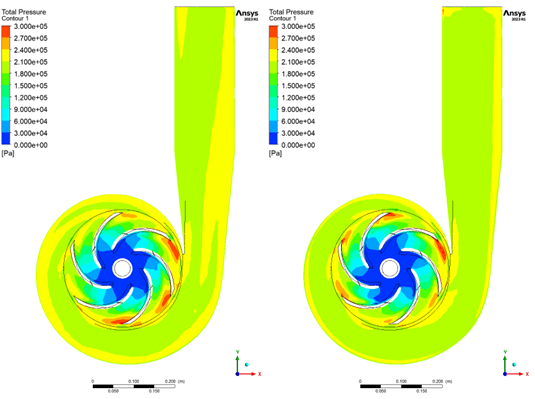

One of the first test cases we tried was a water pump using the steady-state moving reference frame (MRF). The pump was a default output from Ansys BladeModeler. The mesh was designed to be of sufficient resolution for qualitative comparison and prediction of approximate head rise, and to solve within the limits of a GPU with 8GB of memory. The mesh consisted of 2.5 million poly-hexcore cells.

One short-term limitation of the GPU solver is that a segregated pressure-velocity scheme is recommended for steady-state cases. To make the solver speed comparison more realistic, we used the more efficient coupled solver with pseudo-transient relaxation for our CPU solve, and the SIMPLE segregated solver with default under-relaxation factors for our GPU solve.

Shown below are velocity and total pressure plots. The head rise was 21.2 m for the GPU case and 21.4 m for the CPU case.

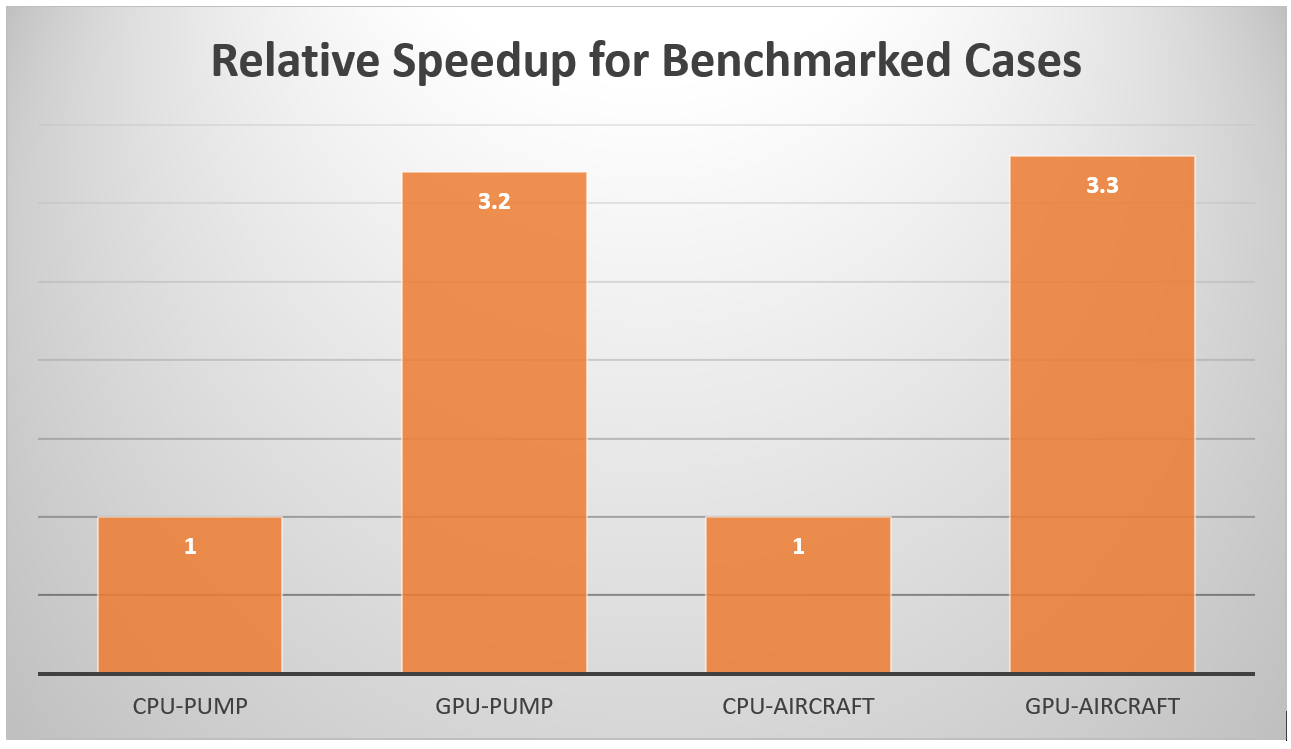

Each solution was run until the default monitor convergence for the exit pressure was satisfied. The results are shown in Table 1.

Table 1: Pump Study computation, steady state

| | GPU Solve | CPU Solve |
|---|---|---|
| Iterations | 300 | 50 |
| Time to Solve (s) | 110 | 361 |
| Speed Relative to CPU Solve | 3.3 | 1.0 |

For this case, we see a tripling in speed with the GPU solver compared with 8 CPU cores, which is a very good result considering the extra iterations required for the segregated solver. We can expect further significant gains when the coupled steady state method becomes available for the GPU solver.
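To see how much the extra iterations cost the GPU run, the Table 1 timings can be broken down per iteration. The short Python sketch below is our own illustration (the variable and function names are not part of Fluent), and the per-iteration figures should be read with care: one coupled iteration does more work than one SIMPLE iteration, so the two are not like-for-like.

```python
# Timings taken from Table 1 (steady-state pump case).
gpu = {"iterations": 300, "seconds": 110.0}  # SIMPLE, segregated
cpu = {"iterations": 50, "seconds": 361.0}   # coupled, pseudo-transient


def seconds_per_iteration(run):
    """Average wall-clock cost of one solver iteration."""
    return run["seconds"] / run["iterations"]


# Wall-clock speedup of the whole solve.
overall_speedup = cpu["seconds"] / gpu["seconds"]
# How much cheaper one GPU iteration is than one CPU iteration.
per_iter_ratio = seconds_per_iteration(cpu) / seconds_per_iteration(gpu)
# How many more iterations the segregated GPU solve needed.
iteration_penalty = gpu["iterations"] / cpu["iterations"]

print(f"GPU: {seconds_per_iteration(gpu):.2f} s/iteration")
print(f"CPU: {seconds_per_iteration(cpu):.2f} s/iteration")
print(f"Overall speedup: {overall_speedup:.1f}x")
print(f"Per-iteration cost ratio: {per_iter_ratio:.1f}x")
print(f"Extra iterations for the segregated solve: {iteration_penalty:.0f}x")
```

In other words, the GPU run needed six times as many iterations, yet each iteration was cheap enough that the overall solve still finished in under a third of the time.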

Transient Pump with Sliding Mesh, 8 core laptop with NVIDIA A4000 GPU

Transient solving with sliding mesh is still a beta feature of the GPU solver in Ansys 2023R1, so we have not yet done extensive testing. In the one comparison we ran, based on the steady-state data from the case above and with both solvers using the SIMPLE method, the GPU solver achieved a roughly 3x speedup over the CPU solver. See Table 2.

Table 2: Pump Study computation, transient

| | GPU Solve | CPU Solve |
|---|---|---|
| Timesteps | 80 | 80 |
| Time to Solve (s) | 963 | 3040 |
| Speed Relative to CPU Solve | 3.2 | 1.0 |

Steady State Aerodynamic lift and drag on aircraft, 8 core laptop with NVIDIA A4000 GPU

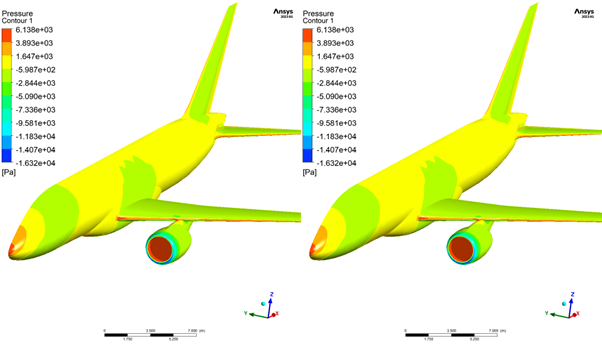

In the second study, we created a mesh containing 3.3 million polyhedral cells on a geometry similar to the one used in Fluent Aero’s half aircraft tutorial. As with the previous setup, our goal was not to refine the mesh to low y+ values, but to fit the problem on our video card and compare results between the CPU and GPU solvers to look at indicative results for lift and drag.

For this study, which contained a very large domain surrounding the aircraft, the coupled non-pseudo-transient solver was the fastest way to reach steady lift and drag values in the CPU solver. The GPU solve used the supported SIMPLE approach.

Solution times, lift and drag for the two solutions are shown in Table 3.

Table 3: Aero Study computation, steady

| | GPU Solve | CPU Solve |
|---|---|---|
| Timesteps | 125 | 125 |
| Time to Solve (s) | 212 | 976 |
| Speed Relative to CPU Solve | 4.6 | 1.0 |
| Lift (N) | 115300 | 113500 |
| Drag (N) | 14470 | 14350 |

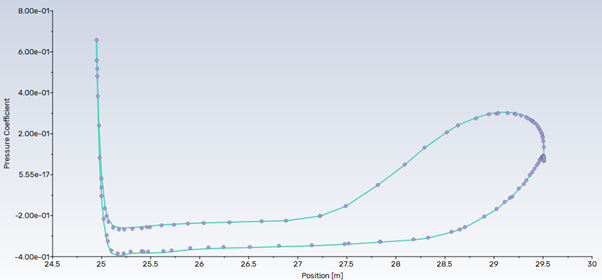

Shown below are pressure plots for both cases on the aircraft surfaces.

Figure 4 Pressure Coefficient along wing chord for the GPU (green line) and CPU (purple dots) solutions

Once again, the GPU solver has shown very good agreement with the CPU solver, even when using a different solution approach, and has arrived at the answer much more quickly on the A4000 GPU than on the 8 cores of the laptop's CPU (Intel Xeon W-11955M, 2.6 GHz).
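To put a number on "very good agreement", the reported quantities can be compared as a percentage difference relative to the CPU result. The Python sketch below is only an illustration using the values quoted in this post; the helper name `percent_difference` is our own.

```python
def percent_difference(gpu_value, cpu_value):
    """Difference of the GPU result relative to the CPU result, in percent."""
    return 100.0 * (gpu_value - cpu_value) / cpu_value


# (GPU, CPU) pairs as reported in this post.
results = {
    "Pump head rise (m)": (21.2, 21.4),
    "Aircraft lift (N)": (115300.0, 113500.0),
    "Aircraft drag (N)": (14470.0, 14350.0),
}

differences = {
    name: percent_difference(gpu_value, cpu_value)
    for name, (gpu_value, cpu_value) in results.items()
}

for name, diff in differences.items():
    print(f"{name}: {diff:+.2f}% relative to CPU")
```

All three quantities differ by less than 2% between the two solvers, despite the different pressure-velocity coupling schemes and the relatively coarse meshes.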

Summary

Figure 5 shows at a glance the relative speedup of choosing to use a fairly affordable A4000 GPU, instead of a typical 8-core CPU used in engineering, for the two simulation cases we looked at in this study.
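The same comparison can be reproduced directly from the solve times in Tables 1 to 3. The following Python sketch is our own illustration: it computes the speedup for each run and prints a rough text bar chart.

```python
# (GPU seconds, CPU seconds) from Tables 1 to 3.
timings = {
    "Pump, steady (MRF)": (110.0, 361.0),
    "Pump, transient (sliding mesh)": (963.0, 3040.0),
    "Aircraft, steady": (212.0, 976.0),
}

# Speedup is CPU wall-clock time divided by GPU wall-clock time.
speedups = {case: cpu_s / gpu_s for case, (gpu_s, cpu_s) in timings.items()}

for case, speedup in speedups.items():
    bar = "#" * round(speedup * 10)  # one '#' per 0.1x of speedup
    print(f"{case:<32} {speedup:4.1f}x {bar}")
```

The rounded values match the "Speed Relative to CPU Solve" rows in the tables: 3.3x, 3.2x and 4.6x.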

In summary, we believe the future for faster HPC firmly lies with GPU acceleration. Ansys Fluent has a unique offering that is enabling laptops like the ones used in this test to perform at the same speed as medium-sized workstations. Some patience will be needed as the many physical models available in the CPU solver are ported across in the coming years, but if you have any questions, please contact us at LEAP and we can give you advice tailored to your hardware and the type of CFD models you need to solve.